Organizations use data integration tools to access, integrate, transform, process, and move data across multiple endpoints and infrastructures, serving a wide variety of data integration use cases. To make it easier to build and deploy data access and data delivery infrastructure for these use cases, vendors in the data integration tools market offer standalone software products or solutions.

These tools support quicker time to value and lower IT risk by letting you extract data, transform it as needed, and load it into a target system (ETL).

With data volumes growing steadily, many organizations now depend on data warehousing, and they rely on ETL (Extract, Transform, Load) tools to feed it. These tools extract data from the relevant sources, transform it into a consistent, usable format, and load it into a destination.
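The three ETL stages can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not how any particular tool implements them: the sample CSV data, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the destination.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a file, API, or database.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,not_a_number
"""

def extract(text):
    """Extract: read rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalise names, parse amounts, and drop rows that fail to parse."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows with an unparseable amount
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), amount))
    return clean

def load(rows, conn):
    """Load: write the cleaned rows to the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

# In-memory SQLite stands in for the real destination (an assumption for this sketch).
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping each stage in its own function makes it easy to swap the source or the destination without touching the transformation logic.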

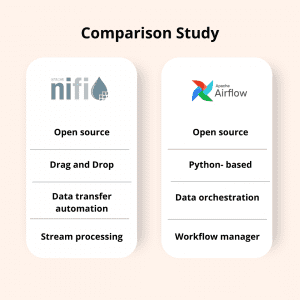

Apache Airflow and Apache NiFi are both widely used for ETL. They form a critical component of a modern data stack because they integrate data across different databases and applications. To choose between these two data integration tools, first work out what you need to do with your data. Once that is clear, selecting the right tool for your business becomes much easier.

In this post, we take a close look at these two popular ETL tools and understand how they differ from each other. So, let’s begin.

Apache Airflow

Airflow is an open-source ETL tool for scheduling workflows programmatically. The platform is primarily used for designing, developing, and tracking workflows.

Airflow was started at Airbnb in 2014 and open-sourced in 2015. It joined the Apache Incubator in 2016 and has since been used extensively with cloud providers such as Azure, AWS, and GCP.

In general, Apache Airflow is a capable task scheduler and data orchestrator that is best suited to routine, scheduled tasks. It can run a range of ETL jobs, monitor systems, send notifications, train machine learning models, perform database backups, and much more. However, it is not designed for streaming jobs.

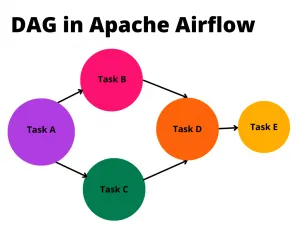

Apache Airflow workflows are defined as Directed Acyclic Graphs (DAGs). Because a DAG can be rendered as a simple graph, it is easy to visualize data pipelines, track their progress, and find and fix bugs. The workflows are also consistent, which makes them simple to manage.

Below is an example of a DAG in Apache Airflow:

A DAG describes the dependencies among its tasks. In the example, Tasks B and C depend on the completion of Task A. Similarly, Task E depends on the completion of every task to its left, that is, all of its upstream tasks.
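The way a scheduler turns such dependencies into an execution order can be sketched with Python's standard `graphlib` module (Python 3.9+). This is a plain-Python illustration rather than Airflow code. The dependencies of A, B, C, and E follow the description above, while Task D depending on B and C is an assumption made to complete the graph.

```python
from graphlib import TopologicalSorter

# Map each task to the set of tasks it depends on (its upstream tasks).
# A, B, C, and E follow the text; D's dependencies are an assumption.
dependencies = {
    "A": set(),
    "B": {"A"},
    "C": {"A"},
    "D": {"B", "C"},
    "E": {"A", "B", "C", "D"},
}

sorter = TopologicalSorter(dependencies)
sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic

waves = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # tasks whose upstream tasks have all finished
    waves.append(ready)
    sorter.done(*ready)  # mark them complete so downstream tasks can be released

print(waves)
```

Each inner list is a group of tasks that could run in parallel once the previous group has finished. In Airflow itself, dependencies like these are typically declared between task objects with the `>>` operator, for example `task_a >> [task_b, task_c]`.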

Notable Features of Airflow

- Ease of use: Basic knowledge of Python is enough to get started with Airflow.

- Highly compatible: Apache Airflow is compatible with Amazon AWS, Google Cloud, and many other platforms.

- Python code: Airflow is based on Python. It has a PythonOperator that enables quick porting of Python code to production.

- Highly scalable: Airflow is extensible, so you can adapt the library to the level of abstraction that suits your context. It also supports horizontal scaling.

- Task dependency management: Airflow manages many kinds of dependencies, such as task completion and DAG run status. It also handles concepts like branching.
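Branching, mentioned in the last feature above, means that a decision made at run time determines which downstream task runs. The idea can be sketched in plain Python as follows. The task names, the threshold of 1000 rows, and the helper functions are hypothetical. Airflow's `BranchPythonOperator` builds on a similar idea, where a Python callable returns the ID of the task to follow.

```python
def choose_branch(row_count):
    """Return the name of the downstream task to run (hypothetical task names)."""
    return "full_refresh" if row_count > 1000 else "incremental_update"

# Hypothetical downstream tasks, keyed by name.
tasks = {
    "full_refresh": lambda: "rebuilt table from scratch",
    "incremental_update": lambda: "appended new rows",
}

def run_branch(row_count):
    """Run only the chosen branch and report which tasks were skipped."""
    chosen = choose_branch(row_count)
    skipped = sorted(name for name in tasks if name != chosen)
    return chosen, tasks[chosen](), skipped

print(run_branch(50))
print(run_branch(5000))
```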

Key Benefits of Airflow

- A rich and intuitive user interface

- Programmatic workflow management

- Flexible workflows written in Python, with no additional framework required

- Python-based workflows that make collaboration with data scientists easier

- A highly active and constantly growing open-source community

Limitations of Airflow

The main downside of this platform is that it is not suitable for streaming jobs.

Apache NiFi

The name NiFi is short for NiagaraFiles, the NSA project from which it originated. It is a data integration tool designed to handle large amounts of data and automate data flow.

This open-source application lets you assemble data flows visually by connecting boxes (processors) and then run them without writing any code. Thus, NiFi is an excellent choice for those who need to integrate data from multiple sources but have no coding experience.

Apache NiFi works with a variety of sources, such as Hadoop, JDBC queries, and RabbitMQ. It is an efficient tool for building scalable directed graphs of data routing, and for sorting, modifying, enriching, combining, splitting, and verifying data along the way.
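NiFi flows are built visually rather than in code, but the routing idea can be illustrated with a small plain-Python sketch. Nothing here is NiFi's API: the record layout, the function names, and the `invalid` output are assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical records standing in for the units of data that move through a flow.
records = [
    {"source": "jdbc", "payload": "id=1;name=alice"},
    {"source": "rabbitmq", "payload": "id=2;name=bob"},
    {"source": "jdbc", "payload": ""},
]

def verify(record):
    """Verify: a record is valid only if it carries a non-empty payload."""
    return bool(record["payload"])

def split(record):
    """Split: break the payload into key/value fields."""
    return dict(pair.split("=", 1) for pair in record["payload"].split(";"))

def enrich(record):
    """Enhance: attach the parsed fields to a copy of the record."""
    return {**record, "fields": split(record)}

def route(all_records):
    """Route each record to a named output, much as edges connect boxes in a flow."""
    outputs = defaultdict(list)
    for record in all_records:
        if not verify(record):
            outputs["invalid"].append(record)
        else:
            outputs[record["source"]].append(enrich(record))
    return dict(outputs)

routed = route(records)
print({name: len(items) for name, items in routed.items()})
```

Each function plays the role of one box in the graph, and the keys of `routed` play the role of the connections leading out of the routing step.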

The application is best suited for long-running jobs. Unlike Apache Airflow, it handles streaming data well, in addition to periodic batches. Another strength of NiFi is that it is not restricted to structured files such as CSV. It can also process images, video, and audio.

Notable Features of NiFi

- Highly configurable: NiFi lets users tune flows for guaranteed delivery, low latency, high throughput, dynamic prioritization, and effective back pressure control.

- Web-based UI: The application offers an easy-to-use UI for designing, controlling, and monitoring flows.

- Built-in monitoring: NiFi uses a data provenance module to monitor and track data from the start to the end of the flow. This supports compliance and troubleshooting.

- Support for secure protocols: It supports secure protocols such as SSL, SSH, and HTTPS, along with other encryption options, which helps keep data secure.

- Effective user & role management: Administrators can restrict which users may view or modify policies, retrieve details, access specific functions, and more.
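Back pressure, listed among the configurable behaviours above, means that a full queue slows down the upstream step instead of letting data pile up without limit. A minimal sketch with Python's standard `queue` module shows the principle. The queue size of 3 and the `offer` helper are arbitrary choices for illustration and say nothing about NiFi's internals.

```python
import queue

# A bounded queue stands in for the connection between two processing steps.
# The limit of 3 is an arbitrary value chosen for illustration.
connection = queue.Queue(maxsize=3)

def offer(item):
    """Try to enqueue an item; return False when the queue is full (back pressure)."""
    try:
        connection.put_nowait(item)
        return True
    except queue.Full:
        return False

accepted = [offer(n) for n in range(5)]  # upstream tries to produce 5 items
drained = connection.get_nowait()        # downstream consumes one item
accepted_after_drain = offer(99)         # upstream can now make progress again

print(accepted, drained, accepted_after_drain)
```

The first three items are accepted, the next two are refused until the downstream step frees a slot, and only then can the upstream step continue.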

Benefits of NiFi

- Supports both live streaming and batch data

- Facilitates buffering of queued data

- Highly scalable and extensible platform

- Users can command and control the application visually without any coding

- Data provenance helps with error handling and troubleshooting

Limitations of NiFi

- Scaling can be difficult. For instance, if you have to copy an entire pipeline to a different environment, you will have to go back to the UI and recreate the settings.

- NiFi is not well suited to jobs that need to be set up and handled manually

Wrapping Up

Both Apache Airflow and Apache NiFi are efficient data integration technologies, each strong in its own area. While Airflow is more of a data orchestration framework, NiFi automates the transfer of data between systems. Comparing the two tools is a bit like comparing apples to oranges.

The ultimate choice depends on your precise project requirements. For instance, if your main objective is handling big data, i.e. extracting it and loading it into a different system, NiFi is the better fit. It is also a great choice if you do not wish to get into coding, as NiFi has a simple drag-and-drop interface.

On the other hand, Airflow is the better ETL tool for setting up dependencies, scheduling tasks, and managing workflows programmatically. The tool is good for organizing workflows and delivering data-driven insights to help your business grow.

At Algoscale, we help you address the challenge of scattered data as we create ETL processes that load data from source systems into the data warehouse after cleaning it. Put this clean data to further use to measure KPIs and other metrics to derive insights. Get improved and consistent data quality, quick response time, and high ROI with Algoscale.