In today’s highly competitive world, data is the élan vital of every organization. Organizations use data to discover trends, gather insights, and respond to customer needs in real time.

Predictive analytics and automation are no longer reserved for unicorns, and organizations that respond quickly to market opportunities are more likely to gain an edge over less data-driven competitors. One tool that is making all of this possible is Apache Kafka.

According to the Kafka website, more than 80% of all Fortune 100 companies use Kafka. The tool is an integral part of the tech stack at Uber, LinkedIn, Netflix, Spotify, Twitter, Pinterest, Airbnb, and Cisco.

Essentially, Kafka is an open-source distributed event streaming platform. It ingests and processes, in real time, the data that thousands of sources generate continuously.

Used by companies across the world, Apache Kafka efficiently manages a constant influx of data and processes it sequentially and incrementally. Sounds incredible, right? In this post, we will help you get familiar with the tool and walk through its most common use cases. So, let’s begin.

Apache Kafka Use Cases

Apache Kafka is a brilliant tool that enables organizations to modernize their data strategies. Discover the most popular Kafka use cases across a wide range of industries.

- Tracking User Activity

This was the original use case for Apache Kafka. The tool was first developed at LinkedIn as a pipeline for tracking user activity, and it was open-sourced in 2011. Activity tracking is typically very high volume, because each user page view generates several activity messages, known as events.

The most common events include user clicks, likes, registrations, orders, and time spent on specific pages. These events are published to dedicated Kafka topics, which downstream systems consume for real-time processing and monitoring or load into a data warehouse for offline processing and reporting.
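To make the topic-and-event idea concrete, here is a minimal in-memory sketch in Python. It is not the Kafka client API: `ToyTopic`, `publish`, and the `page-views` topic are hypothetical names, and `zlib.crc32` stands in for the murmur2 hash that Kafka’s default partitioner applies to message keys. What it illustrates is that keying events by user ID sends all of one user’s events to the same partition, so their relative order is preserved.

```python
import json
import zlib


class ToyTopic:
    """Hypothetical in-memory stand-in for a Kafka topic (not the Kafka client API)."""

    def __init__(self, name: str, num_partitions: int = 3):
        self.name = name
        # Each partition is an append-only list; a record's list index is its offset.
        self.partitions = [[] for _ in range(num_partitions)]

    def publish(self, key: str, event: dict) -> tuple:
        # Hash the key so every event for the same user lands in the same partition.
        # Kafka's default partitioner uses murmur2; crc32 is only a stand-in here.
        p = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[p].append((key, json.dumps(event)))
        return p, len(self.partitions[p]) - 1  # (partition, offset)


page_views = ToyTopic("page-views")
events = [
    ("user-42", {"type": "page_view", "page": "/jobs"}),
    ("user-7", {"type": "click", "target": "apply"}),
    ("user-42", {"type": "like", "post": 123}),
]
# Publish in order and record where each event landed.
positions = [page_views.publish(user, ev) for user, ev in events]
print(positions)
```

In a real deployment these events would be sent through a Kafka producer client instead; the keyed-partitioning idea stays the same.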

LinkedIn uses Kafka to manage its user activity data streams, and the technology powers several LinkedIn products, such as LinkedIn Today and LinkedIn Newsfeed.

- Real-time Data Processing

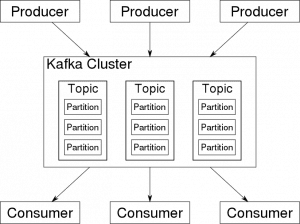

Real-time data processing is another critical Apache Kafka use case. Many systems require data to be processed as soon as it becomes available. In these systems, the processing pipeline comprises multiple stages: raw, unstructured data is consumed from Kafka topics and then aggregated, enriched, and transformed into new topics for further consumption or follow-up processing.
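A single stage of such a pipeline follows a consume, enrich, transform, produce loop. The Python sketch below models one stage with plain lists standing in for Kafka topics; the record format, the `user_country` lookup table, and the dead-letter list for malformed input are assumptions for illustration, not part of any Kafka API.

```python
import json

# Plain lists stand in for Kafka topics; the contents and lookup table are hypothetical.
raw_clicks = [
    '{"user": "u1", "page": "/home", "ms": 1200}',
    "not-json",
    '{"user": "u2", "page": "/cart", "ms": 300}',
]
user_country = {"u1": "DE", "u2": "US"}


def process_stage(source, lookup):
    """Consume raw records, enrich and transform valid ones, and emit them to a new 'topic'."""
    enriched, dead_letters = [], []
    for record in source:
        try:
            event = json.loads(record)
        except json.JSONDecodeError:
            dead_letters.append(record)  # park malformed input instead of crashing the stage
            continue
        event["country"] = lookup.get(event["user"], "unknown")  # enrichment
        event["seconds"] = event.pop("ms") / 1000                # transformation
        enriched.append(json.dumps(event))
    return enriched, dead_letters


enriched_clicks, rejected = process_stage(raw_clicks, user_country)
```

Each stage only reads from one topic and writes to another, so further stages can be chained onto `enriched_clicks` without changing this one.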

Netflix is a good example of Kafka in real-time data processing. As the Netflix TechBlog points out, the company uses its Keystone pipeline to process billions of events per day, and Apache Kafka clusters are an integral part of that pipeline, enabling seamless stream processing.

- Commit Log and Log Aggregation

With the rapid rise of distributed systems, managing and accessing logs has become a difficult task for many organizations. Kafka can serve as an external commit log for a distributed system, which is another common use case. The log replicates data between nodes and acts as a re-syncing mechanism that failed nodes use to restore their data.
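The re-syncing idea can be sketched as follows. This is a deliberately simplified model, assuming plain Python lists as logs and comparing entries by value: a recovering follower truncates any tail that diverges from the leader and then replays the leader’s entries in order. Kafka itself tracks offsets, leader epochs, and a high watermark to do this safely, so treat the hypothetical `resync` function as an illustration of the principle only.

```python
def resync(leader_log: list, follower_log: list) -> int:
    """Bring a recovering follower's log back in line with the leader's log.

    Returns the number of entries copied from the leader.
    """
    # Find the longest prefix on which the follower and leader agree.
    common = 0
    while (common < len(leader_log) and common < len(follower_log)
           and leader_log[common] == follower_log[common]):
        common += 1
    # Discard any divergent tail the follower wrote before it failed.
    del follower_log[common:]
    # Replay the leader's entries, in order, from the first missing position.
    follower_log.extend(leader_log[common:])
    return len(leader_log) - common


leader = ["a", "b", "c", "d"]
follower = ["a", "b", "x"]  # crashed after writing an entry the leader does not have
copied = resync(leader, follower)
```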

Many organizations also use Apache Kafka as a replacement for a log aggregation solution. A typical log aggregation tool collects physical log files from multiple servers and brings them together in a central place for processing. Kafka abstracts away the details of files and provides a cleaner abstraction of log or event data as a stream of messages. This enables lower-latency processing, easier distributed data consumption, and support for multiple data sources.
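The shift from files to a stream of messages can be illustrated with a small Python sketch. `LogMessage`, `to_stream`, and the sample server logs are hypothetical; the list returned by `to_stream` plays the role of a topic that any number of consumers could read independently, for example one consumer that only looks for errors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogMessage:
    source: str   # server that wrote the line
    line_no: int  # 1-based position in that server's file
    text: str


def to_stream(files: dict) -> list:
    """Flatten per-server log files into one list of messages (a stand-in for a topic)."""
    stream = []
    for server, contents in files.items():
        for line_no, line in enumerate(contents.splitlines(), start=1):
            if line.strip():  # skip blank lines
                stream.append(LogMessage(server, line_no, line))
    return stream


server_logs = {  # hypothetical file contents
    "web-1": "INFO started\nERROR disk full\n",
    "web-2": "INFO started\n\nWARN slow response\n",
}
stream = to_stream(server_logs)
# One independent consumer: keep only error messages.
errors = [m for m in stream if m.text.startswith("ERROR")]
```

Because each message carries its source, consumers no longer need to know where or how the original files were stored.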

Twitter, the well-known social networking platform, uses Kafka in a similar way. It deploys Kafka in its ML logging pipeline, which reduced the entire pipeline’s latency from seven days to just one day.

Below is an image showcasing Twitter’s ML logging pipeline:

- Messaging

In this use case, Kafka replaces a traditional message broker. In other words, it processes and mediates communication between applications.

Compared with most messaging platforms, Kafka offers better throughput, low latency, and built-in partitioning, replication, and fault tolerance. These attributes make it well suited to large-scale message-processing applications.
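Partitioning is also what lets Kafka consumers scale out: within a consumer group, each partition is read by at most one consumer. Kafka ships several assignment strategies (such as range, round-robin, and sticky); the function below is a simplified, hypothetical round-robin version for a single topic, not Kafka’s own assignor.

```python
def assign_round_robin(partitions: list, consumers: list) -> dict:
    """Give each partition to exactly one consumer in the group, cycling through consumers."""
    assignment = {c: [] for c in consumers}
    for i, partition in enumerate(sorted(partitions)):
        assignment[consumers[i % len(consumers)]].append(partition)
    return assignment


six = assign_round_robin(list(range(6)), ["c1", "c2", "c3"])
# {'c1': [0, 3], 'c2': [1, 4], 'c3': [2, 5]}
two = assign_round_robin([0, 1], ["c1", "c2", "c3"])
# {'c1': [0], 'c2': [1], 'c3': []}  -> c3 sits idle
```

The second call shows the design trade-off: adding consumers beyond the number of partitions does not add throughput, because the extra consumers receive no partitions.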

Pinterest is one example of this use case. The popular image-sharing app has over 200 million monthly users, and every repin, click, or photo enlargement generates Kafka messages. The app uses Kafka streams to manage all of this traffic, as well as for content indexing, spam detection, and recommendations.

When to Use Kafka?

Apache Kafka is messaging software that lets you publish and subscribe to streams of messages. But beyond serving as a message broker, the tool works well in many other scenarios.

For instance, your organization might have several data pipelines that carry communication between multiple services. If many services communicate with each other in real time, however, the architecture quickly becomes complicated and requires you to deploy several protocols and integrations. In such cases, Apache Kafka can decouple these pipelines and simplify the software architecture, making it more manageable.
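A rough way to see why a central broker simplifies things is to count integrations. The sketch below assumes the worst case, in which every service talks directly to every other service, and compares it with a design where each service connects only to Kafka; both helper functions are hypothetical.

```python
def point_to_point_links(n: int) -> int:
    # Worst case: every pair of services has its own direct integration.
    return n * (n - 1) // 2


def broker_links(n: int) -> int:
    # Each service integrates only with the broker (producing, consuming, or both).
    return n


for n in (5, 10, 50):
    print(n, point_to_point_links(n), broker_links(n))
# 5 10 5
# 10 45 10
# 50 1225 50
```

Pairwise integrations grow quadratically with the number of services, while broker connections grow linearly.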

When Not to Use Kafka?

Given Kafka’s scale and broad scope, many organizations choose to integrate it into their tech stack. However, the tool has certain limitations, including its overall complexity, that make it less than ideal in the following scenarios:

- Where there are low volumes of data

By design, Apache Kafka is meant to handle huge volumes of data, so it is an unnecessary investment if you only need to process a small number of events or messages each day. For such scenarios, a traditional message queue such as RabbitMQ is often a better choice.

- As a processing engine for ETL jobs

ETL jobs require you to perform data transformations on the fly, and Kafka is not always the right engine for that. Although Kafka has a streaming API, using it for ETL requires organizations to build complex interaction pipelines between producers and consumers and then maintain the entire system, which takes a considerable amount of work.

Summing up Apache Kafka’s Use Cases and How Algoscale Can Add Value

As a distributed streaming platform, Kafka is among the most reliable tools available today. Its use cases show that it is an ideal tool for organizations that need to process real-time data efficiently. It lets engineering teams take advantage of its scalability and boost performance to meet customers’ modern demands.

At Algoscale, we help you reinvent your business with the Apache Kafka event streaming platform. Our proficient Kafka consultants and developers have demonstrated expertise in developing solutions that handle massive amounts of data streaming from multiple sources and distribute them across clusters.