In most organizations, information is dispersed across departments, platforms, and individuals, leaving a fragmented landscape that makes it hard to access valuable insights in real time.

Additionally, the dispersion of knowledge creates inefficiencies, delays in decision-making, and impediments to collaborative efforts. Critical data might be buried in emails, stored in disparate databases, or confined within the minds of employees, making it difficult to harness the full potential of organizational intelligence.

At Algoscale, we set out to address this issue by implementing a large language model (LLM) combined with retrieval augmented generation (RAG).

Overview of RAG LLM

Large language models (LLMs) have changed how people interact with information. Trained on vast datasets, they generate text responses that apply broadly to user inquiries.

However, these models come with their share of limitations. An LLM can draw only on the data it was trained on, which may be weeks, months, or years out of date. In corporate settings, LLMs may also lack specific information about the organization, leading to inaccurate responses or ‘hallucinations’ (fabricated answers).

Retrieval Augmented Generation (RAG), integrated into LLM applications, addresses this challenge by extending the model with specific, up-to-date data sources: at query time, relevant passages are retrieved from those sources and supplied to the model alongside the user’s question. As a result, the generated responses are not only contextually relevant but also tailored to the needs of a particular organization or industry.

Moreover, RAG makes it possible to cite sources within LLM outputs, enhancing accountability by providing references. Readers can then trace where the data behind a response came from.
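The retrieve-then-generate idea, including source citation, can be illustrated with a minimal sketch. It is not our production code: the corpus, file names, and function names below are hypothetical, and simple word-overlap similarity stands in for the embedding-based search a real system would more likely use. The sketch stops at building the prompt, so no model call is made.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory corpus; the ids and text are illustrative only.
DOCUMENTS = {
    "hr/leave-policy.md": "Employees accrue paid leave every month and request it through the HR portal.",
    "it/vpn-setup.md": "Install the VPN client and sign in with your company account to reach internal tools.",
    "finance/expenses.md": "Submit expense reports with receipts before the end of each month.",
}


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k (source_id, text) pairs most similar to the query."""
    q = tokenize(query)
    ranked = sorted(DOCUMENTS.items(), key=lambda item: cosine(q, tokenize(item[1])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Place retrieved passages, tagged with their source ids, ahead of the question."""
    context = "\n".join(f"[{source}] {text}" for source, text in passages)
    return (
        "Answer using only the context below and cite the bracketed source ids.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    question = "How do I request paid leave?"
    print(build_prompt(question, retrieve(question)))
```

Because every passage enters the prompt with its source id attached, the model can be asked to quote those ids in its answer, which is what makes citation possible.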

Case Study: Algoscale Implementation

At Algoscale, we faced a significant challenge with scattered information across various departments. Vital documents were dispersed across different platforms, making it cumbersome for employees to access crucial information promptly.

This lack of centralized access resulted in delays, communication gaps, and inefficiencies in decision-making processes. Critical data, essential for daily operations and decision support, was often buried in department-specific repositories, hindering collaboration and obstructing the seamless flow of information across the organization.

Recognizing the pressing need to address this information silo challenge, Algoscale embarked on an experiment. The company decided to use a RAG-powered LLM to streamline internal document access and management. This involved integrating the RAG LLM with a Slackbot, creating a tool for real-time, contextually relevant information retrieval.

The goal was to let employees access pertinent documents directly within Slack, the communication platform they already used. Beyond easing the problems of scattered information, this was intended to improve workplace efficiency by providing instant access to accurate and up-to-date data.
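To make the Slack side of this concrete, here is a rough sketch of how a bot might turn a mention into a threaded reply. It assumes an event shaped like Slack’s `app_mention` payload (with `channel`, `ts`, and `text` fields). The `handle_mention` function and the stubbed `answer` callable are hypothetical names used for illustration, not our actual implementation. Sending the reply through Slack’s API is left out.

```python
import re
from typing import Callable

# Slack encodes a user mention in message text as <@USERID>.
MENTION = re.compile(r"<@[A-Z0-9]+>")


def handle_mention(event: dict, answer: Callable[[str], str]) -> dict:
    """Turn an app_mention-style event into a threaded reply payload."""
    question = MENTION.sub("", event.get("text", "")).strip()
    if not question:
        reply = "Ask me a question after mentioning me."
    else:
        # `answer` stands in for the RAG pipeline (retrieve, then generate).
        reply = answer(question)
    return {
        "channel": event["channel"],
        # Reply in the existing thread if there is one, else start one.
        "thread_ts": event.get("thread_ts", event["ts"]),
        "text": reply,
    }


if __name__ == "__main__":
    sample_event = {
        "type": "app_mention",
        "channel": "C123",
        "ts": "1700000000.000100",
        "text": "<@U0BOT> where is the leave policy?",
    }
    print(handle_mention(sample_event, lambda q: f"(stub answer) You asked: {q}"))
```

Keeping the handler a pure function of the event and an injected `answer` callable makes it easy to test without a Slack workspace or a model.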

What Can Algoscale’s Slackbot Do?

Algoscale’s Slackbot can perform a variety of tasks for its users, such as:

Answer questions

The Slackbot can quickly answer user queries on various topics related to Algoscale’s expertise. For instance, it can answer questions like ‘What are the key trends in data warehousing?’ or ‘What is data integration in data mining?’ Depending on the user’s preference, the Slackbot can give more detailed responses, and it cites the sources it used.

Streamline access to internal documents

The Slackbot serves as a centralized hub for document access, facilitating streamlined access to internal documents across different departments. Users can now effortlessly retrieve pertinent information directly within the Slack platform, eliminating the need to navigate through disparate systems or search through multiple repositories. This not only saves time but also ensures that critical documents are readily available for improved decision-making and collaboration.

Generate reports

The Slackbot can generate reports on demand, providing users with consolidated and organized insights. Whether it’s compiling data from various sources or summarizing key metrics, users can specify the format, length, and style of each report.

Interact with internal as well as external systems to take actions using AI agents

One of the standout features of the Slackbot is its capacity to interact seamlessly with both internal and external systems. Through integration with AI agents, it can perform a range of actions, automating tasks and responses. This includes interacting with internal databases, triggering workflows, and even connecting with external systems to gather or disseminate information.
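One common way to structure this kind of agent is a registry of named tools that the model selects by emitting a structured action. The sketch below assumes that pattern. The tool names, the JSON action format, and the stubbed data are all hypothetical, and the functions only stand in for real database or external-system calls.

```python
import json
from typing import Callable

# Registry of tools the agent is allowed to call, keyed by name.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function as a callable tool."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


@tool("lookup_employee")
def lookup_employee(employee_id: str) -> str:
    # Stand-in for an internal database query; the record is made up.
    directory = {"E100": "Example Person (Data Engineering)"}
    return directory.get(employee_id, "unknown employee")


@tool("create_ticket")
def create_ticket(summary: str) -> str:
    # Stand-in for a call to an external ticketing system.
    return f"ticket created: {summary}"


def run_action(action_json: str) -> str:
    """Execute one model-requested action of the form {"tool": ..., "args": {...}}."""
    action = json.loads(action_json)
    fn = TOOLS.get(action.get("tool"))
    if fn is None:
        return f"error: unknown tool {action.get('tool')!r}"
    try:
        return fn(**action.get("args", {}))
    except TypeError as exc:
        # Raised when the model supplies missing or unexpected arguments.
        return f"error: bad arguments ({exc})"


if __name__ == "__main__":
    print(run_action('{"tool": "create_ticket", "args": {"summary": "reset VPN access"}}'))
```

Restricting the agent to an explicit registry, and returning errors as text instead of raising, keeps unknown or malformed requests from reaching internal systems.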

Results and Benefits

Improved information retrieval

The implementation of Algoscale’s RAG LLM-powered Slackbot has significantly elevated the speed and efficiency of information retrieval within the organization. Employees now experience streamlined access to relevant documents and data, leading to quicker decision-making processes. The improved search functionality ensures that critical information is at their fingertips, fostering a more agile and informed workforce.

Reduced cost through fewer routine queries for HR personnel

A noteworthy outcome of the RAG LLM implementation is the reduction in the need for HR personnel to address routine queries from teams. The Slackbot’s ability to provide instant and accurate responses has alleviated the burden on HR professionals, allowing them to focus on more strategic and complex aspects of their role. This not only translates to cost savings but also contributes to a more efficient allocation of human resources within the organization.

Enhanced collaboration

The Slackbot has played a pivotal role in breaking down silos and promoting cross-departmental knowledge sharing. By serving as a centralized platform for information exchange, it has facilitated collaboration among teams, fostering a culture of transparency and shared insights.

Boosted workplace efficiency

Faster access to information, reduced dependency on manual interventions, and improved collaboration collectively contribute to a more productive work environment. Tasks that once required extensive time and effort are now streamlined, allowing employees to focus on strategic initiatives and innovation, ultimately driving the organization toward its goals.

Implementation

OpenAI GPT model

Our RAG LLM is built on an OpenAI GPT model. Its natural language processing capabilities allow the Slackbot to understand user queries and generate contextually relevant responses, so that it delivers coherent and accurate information and a better overall user experience.

LlamaIndex and LangChain

We used LlamaIndex and LangChain to extend the functionality of the RAG LLM-powered Slackbot. LlamaIndex, known for its indexing capabilities, played a crucial role in organizing and retrieving large amounts of internal data efficiently. Meanwhile, LangChain, a framework for composing prompts, model calls, and tools into pipelines, helped the Slackbot interpret queries in context, contributing to more accurate and nuanced responses.
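Before documents can be retrieved, indexing pipelines typically split them into smaller, overlapping chunks that each remember their source. The following sketch shows that step in plain Python. It does not use LlamaIndex’s API. The function names, chunk sizes, and sample document are assumptions chosen for illustration.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # This chunk already reaches the end of the text.
    return chunks


def build_index(documents: dict[str, str], size: int = 50, overlap: int = 10) -> list[dict]:
    """Flatten documents into chunk records that keep track of their source."""
    records = []
    for source, text in documents.items():
        for position, chunk in enumerate(chunk_words(text, size, overlap)):
            records.append({"source": source, "position": position, "text": chunk})
    return records


if __name__ == "__main__":
    # Hypothetical document, only to show the shape of the output.
    docs = {"handbook/onboarding.md": " ".join(f"word{i}" for i in range(120))}
    for record in build_index(docs):
        print(record["source"], record["position"], len(record["text"].split()))
```

The overlap keeps sentences that straddle a chunk boundary retrievable from either side, and the `source` field is what later allows an answer to cite where a passage came from.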

Solution Architecture

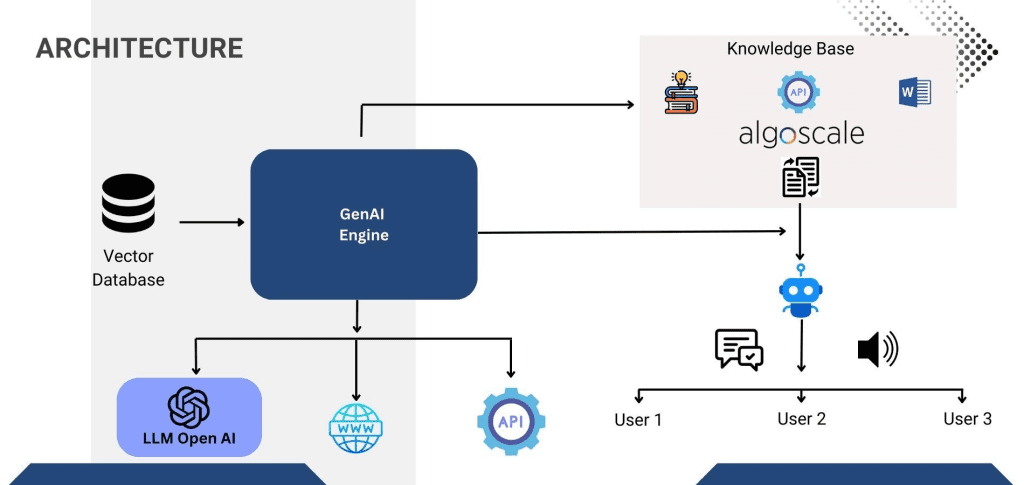

Algoscale’s solution architecture seamlessly integrated OpenAI GPT, LlamaIndex, and LangChain. This cohesive blend facilitated efficient data retrieval and contextual understanding within the Slackbot. Here is what it looked like:

Conclusion

Algoscale’s implementation of a RAG LLM, together with the Slackbot built on it, has changed how information is managed throughout the organization. It has significantly enhanced workplace efficiency and knowledge accessibility, leading to a more agile work environment.